Morfternight #97: Democracy, Physics, and Music.

The one with an eclectic selection.

👇 tl;dr

Today we reap the fruit of a week off work, with a photo from outside the city, a selection of links out of our usual areas, a new website and a relaxed chat about AI. Also, you have 24 hours left to vote in the translation poll.

🤩 Welcome to the 158 new Morfternighters who joined us this week.

I love having you here and hope you’ll enjoy reading Morfternight.

Share with your friends by clicking this button.

👋 Good Morfternight!

Hello from climate-challenged Vienna, where summer isn’t reading the room, and temperatures are back to 30º Celsius this week.

Last week I shared a poll asking you to vote for French, or Italian, as the language you want to see this newsletter translated into, and I sent out Morfternight a bit late - on Monday. Because of this, and since I'm on time this week, there's still one day left to vote. I'm leaving the poll here for you to make sure your voice is heard.

The race is incredibly tight, 52% in favor of French at the time of this writing, so every single vote counts. Don't miss your chance to shape the future of Morfternight!

Time to vote! Next issue will be translated into the winning language.

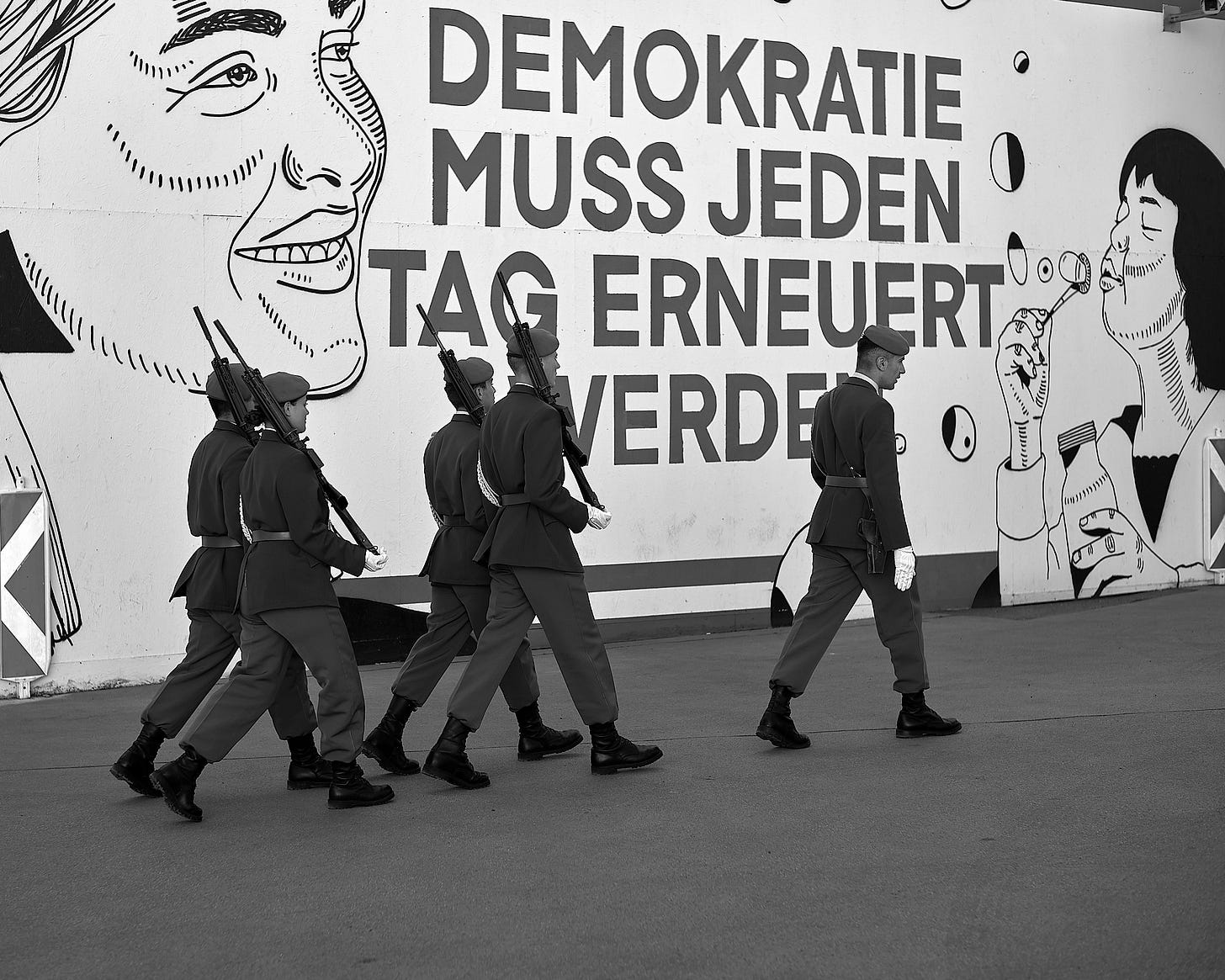

📷 Photo of the week

“Demokratie muss jeden Tag erneuert werden,” or, in English, “Democracy must be renewed every day,” is the slogan illustrating the barriers around the renovation of the Presidential Chancellery, the Hofburg Leopoldine wing in which the Austrian President lives and works. It was always a bit tongue-in-cheek to use such a strong statement to explain some building renovation, but last week, with those five soldiers parading in front of it, it briefly reached into the register of dark humor…

I took some time off this week, and on Thursday, we decided to drive to the Grüner See, a small but very charming lake in Styria, southwest of Vienna.

We’ll have to visit again next May or June, as the lake’s primary water source is snow melting in the spring, reaching up to 12 meters in depth. This week, after the hot summer we just had, it was barely two to three meters, and the waters had receded enough to split the lake into two separate bodies of water.

This is a view of the Messnerin, a 1,835-meter-tall mountain behind the lake. I hear the view from the top is incredible. I’ll have to get in better shape if I want to experience it, though, as there’s a 1,100-meter altitude gap between the last parking spot and the peak.

🗺️ Three places to visit

This is not the first, nor the last, mention of the fact that I was off this past week, but it's important that I mention it because it explains why my mind wasn't focused on the usual. As a consequence, I want to share with you three little gems that have nothing to do with Leadership, Product, AI, or Tech.

I hope you won't mind this little detour, or perhaps it'll even make you smile.

① I shared in Morfternight #41 a fascinating 1983 video series, “Fun to Imagine,” in which Richard Feynman explains science in a way that is fun and accessible to everyone. A few days ago my FYP1 presented me with excerpts of a lecture Professor Feynman delivered at Cornell University in 1964: “The Distinction of Past and Future.”

I tracked it down on YouTube, the usual go-to when something interesting pops up on TikTok, and discovered it was part of a series dubbed the “Messenger Lectures,” a yearly Cornell tradition where a guest speaker presents.

I was extremely pleased to realize that not only were these available on Youtube, but that Cornell University published them, along with synchronized transcripts here: Feynman's Messenger Lectures.

I haven’t watched them all yet, but “The Distinction of Past and Future” is exceptionally insightful and fun, so I can only recommend the series.

② Another throwback, this time to Morfternight #54 where I shared an episode of Leonard Bernstein’s Young People's Concerts.

I started revisiting the entire collection of more than 50 episodes, accessible on YouTube as a playlist: Leonard Bernstein - Young People’s Concerts (All Episodes)2.

Bernstein does with Music what Feynman does with Physics, making it accessible to everyone, demonstrating, as if it was necessary, that people who truly master a field can explain it to anyone, including children.

Listening to these two speak in such simple and clear terms is such a relief in a world full of jargon and acronyms thrown around by many to mask the lack of depth of their knowledge.

③ I think the first time I heard Nick Cave & The Bad Seeds was in the soundtrack of 'Until the End of the World,' the Wim Wenders movie.

It was about 30 years ago, and Nick Cave has remained a pillar of my music library since. I still have all the CDs, although I don’t have a way to listen to them anymore.

But I digress…

So naturally, this article caught my attention earlier this week: Nick Cave on Creativity, the Myth of Originality, and How to Find Your Voice.

Now, Maria Popova’s article is excellent, as is her whole site, The Marginalian.

The real gem hidden there, though, was the discovery of The Red Hand Files, a website that looks almost like a blog, except for the fact that each post is Nick Cave’s response to one (or several) letters from his fans and readers.

I started reading through the latest ones and found Cave’s writing and viewpoints both profoundly touching and remarkably balanced.

I think that when I grow old, I’d love to do that — receive questions from people and answer them publicly.

🌐 A new website at paolo.blog

Did I mention I was off this week?

I know, I know, I am joking. It’s the third time I mention this fact, but that’s not because I am so proud of taking a week off. Rather, it's to explain how I finally managed to do a number of things I wished I had time for.

One of these was refreshing the blog.

I have been going through this exercise relatively often over the past few years. It serves as both an exercise for me to stay up to date with the changes in the WordPress editor – which has evolved to the point where I can create almost anything I envision just with a mouse, without needing to type any code – and a testament to the fact that this evolution of the site editor has made the practice a lot more amusing than it used to be.

With this version, I still use a monochrome color palette, not only because I love it, but also because I find it accentuates my black and white photos beautifully.

I have tried to maximize the space large displays offer, while, of course, ensuring responsiveness on small mobile device screens. I also worked to make the text as readable as possible, despite a relatively dark theme, to complement the photos effectively.

I do have a light version of the theme ready to go; I just need to figure out how to allow visitors to toggle between dark and light modes.

The only area that is not complete is the Newsletter section. It receives copies of this newsletter after publication, and I am behind in transferring them over.

Who knows, in the near future, I might even migrate this newsletter entirely to the site, consolidating everything in one place.

If you visit the blog, which would make me very happy, please share any feedback you have or point out anything you find broken.

🤖 How do LLMs respond to questions? Insights from a Casual Conversation

In a casual chat recently, a dear friend brought up some pretty profound questions regarding Large Language Models (LLMs) that I found worth sharing with you all. Let's dive in to unravel the complexities of LLMs through a laid-back conversation.

Note: The conversation with my friend happened in french, in a messaging app, which means it wasn’t very organized. I leveraged ChatGPT to reorganize it and translate it. Then of course, I edited it manually.

Understanding the Core Functionality of LLMs

After watching Geoffrey Hinton's video (shared in Morfternight #94), I understood a bit about neural networks for image and text recognition. However, I'm having trouble understanding how an LLM can answer a question. I get that it can complete a sentence or phrase, but how does it choose the first word or sentence to start the response? It seems as if it is trained to complete questions with full answers, not just a word followed by another. What's your take on this?

You're right, it does seem a bit mysterious at first. In essence, an LLM doesn't know that you've asked a question or even what a question is. What it does know is that you've given it a text to complete recursively. It statistically selects the most probable word to start the response based on the input and continues to build on it until it identifies a "end of response" token, signaling it to stop. It's all about probabilities and statistical data at play here.

Delving Deeper into the Functionality

Hmm...so, statistically speaking, in the vast collection of human-written text, the text following a question is most often an answer? That doesn't quite feel intuitive, especially considering how we humans, including us, tend to make assertive statements rather than ask questions, right?

It's indeed a matter of large numbers. That's why the "responses" tend to converge towards the average, inheriting cultural biases and occasionally hallucinating. Essentially, the LLM responds with what closely resembles a probable answer to your query.

This 'probability' is variable: each request initiates with a random "seed" to prevent identical responses to the same question.

You can also adjust the "temperature" which dictates how much the LLM can deviate from the ideal choice for each token – a setting that accumulates since each chosen token is then used to predict the following one.

Behind the user-friendly façade of ChatGPT, these controls are not accessible but when you deal with LLMs more directly via the APIs they are, making it essential to craft your query carefully for desired results.

In other words, if you want better answers you need to make sure that the answer to your question is the most likely output. For a deeper insight, you might find this article quite illuminating: Prompt Engineering Guide on Generative AI & LLMs.

Addressing Complexities & Fears

To go back to the original question, and how does an LLM know how to answer a question, or that it is a question, or what is an answer, I think it’s a bit more complex than just imagining that statistically the word that follows a question is the first one of the answer.

In the gigantic multi-dimensional matrix where LLMs classify words and concepts, elements of a question are situated near those of a potential answer, and this proximity is a crucial factor in the LLMs choice of words.

But how does an LLM categorize and retrieve the correct elements? This part is quite scary, because we do not understand how it works. To use a great metaphor I recently read, the uncertainty surrounding their actual functionality places us in the shoes of cavemen discovering fire – utilizing it without fully grasping how it works.

This ignorance also brings up deeper questions about our intelligence – whether it's something fundamentally distinct or just a grander scale version of what these machines can do.

For You Page: the personalized feed generated by the Tik Tok algorithm.

If you prefer to read instead of watching the video recordings, The Leonard Bernstein Office website offers access to all the transcripts.