Morfternight #48: Electric Sheeps

The one where we talk to and about AIs.

🤩 We welcome five new Morfternighters who joined us last week! We love to have you here, and remember: like a smile, this newsletter can be shared with others without you losing anything.

📷 Photo of the week

Not an electric Sheep - More Photos

👋 Good Morfternight!

I am stretching time zones to their limits, but it’s still Wednesday in Poipu Kai!

I am back home for a whole five days before flying to Austin, TX, next Monday to attend NamesCon and meet my .blog teammates. If you live in Austin and feel like meeting for a coffee, tea, or any other drink, let me know (you can reply to this email). I have a pretty tight schedule, as I am in town for only three full days, but I would love to meet Morfternighters!

In the meantime, today I greet y’all from Vienna!

🤖 S’il vous plaît... dessine-moi un mouton…électrique!

“Please… draw me a[n electric] sheep!”

Over the past week, I felt like a cyber-Petit-Prince, asking a software program to draw for me. Let me step back, or this won't be very clear.

The third cohort of Pencil Pirates just started.

I participated in the first cohort back in April. I didn’t draw as much as I wished, but I met a few awesome people and learned how to use Procreate on an iPad to sketch a bit. At the end of May, I participated in the second cohort. I couldn’t be as present in the live calls as I was traveling, but I succeeded in publishing 30 atomic visuals over 30 days! For the third, I wanted to do something different.

You may have heard or read about DALL•E 2, a drawing AI.

It’s a software program that takes a typed sentence as input and produces images in return. This fascinating tool doesn’t create collages or juxtapositions of existing images. Even though it has been trained on a gazillion existing drawings and photos, it produces new images that never existed before.

It’s also not truly intelligent; DALL•E doesn’t know what it is drawing.

My basic understanding is that it’s made of two primary parts. The first is a software program with the ability to analyze an English sentence and understand its meaning. The second part is another program that can analyze an image and understand what’s in it. DALL•E then starts generating random images in vast amounts, analyzing them, and comparing the result with the input sentence.

Because it does all of this very quickly, it generates impressive results in just a few seconds.
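For the curious, here is a toy sketch of the "generate lots of candidates, then keep the ones that best match the sentence" idea as I described it above. It only illustrates my simplified mental model and is not how DALL•E is actually implemented. Everything in it is an assumption made up for the example: the sentence's "meaning" is a short list of numbers, an "image" is a random list of the same length, and the names `score` and `generate_and_rank` are hypothetical.

```python
import random


def score(candidate, target):
    """Toy stand-in for 'compare the image with the sentence'.

    Returns the negative squared distance, so a higher score means a closer match.
    """
    return -sum((c - t) ** 2 for c, t in zip(candidate, target))


def generate_and_rank(target, n_candidates=1000, keep=4, seed=0):
    """Generate random candidates and keep the `keep` best-scoring ones."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    candidates = [[rng.random() for _ in target] for _ in range(n_candidates)]
    candidates.sort(key=lambda c: score(c, target), reverse=True)
    return candidates[:keep]


# Hypothetical numeric stand-in for the meaning of a prompt.
prompt_vector = [0.2, 0.8, 0.5]
best = generate_and_rank(prompt_vector)
for candidate in best:
    print([round(x, 2) for x in candidate], round(score(candidate, prompt_vector), 4))
```

The four rows printed at the end play the role of the four images returned for each prompt: the best matches out of a large pile of random attempts.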

🗣️ Talking to DALL•E

As the onboarding phase of Pencil Pirates began, a few exercises were suggested to practice what we were learning.

What’s very intriguing is that there are no strict rules about how to write prompts. Every time I submit a sentence, the AI generates four images. I can ask for more variations with the same prompt, select one and ask for more variations inspired by that one, and even delete a part of an image and ask for it to be filled with a secondary prompt.

There are a few specific terms, like “digital art,” that lead to higher-quality images, but you can type more or less anything, as long and detailed or as short and vague as you wish, and see what comes out of it.

You can ask for drawings, paintings, photos, and 3D renders.

You can also mention a famous painter or a painting technique to give a specific style to your drawing.

Here, for instance, I typed:

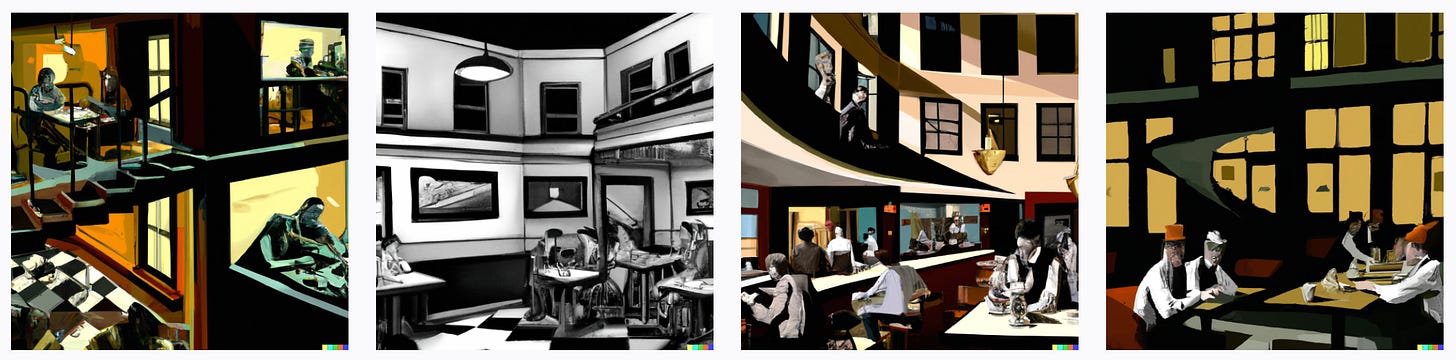

Edward Hopper's "Nighthawks" in the style of M.C. Escher, digital art.

DALL•E produced these four images:

I then picked the one on the left and asked for variations:

I love how the prompt is translated, in a few seconds, into images that I think share the atmosphere of both painters’ work.

While writing this, with these ideas bouncing around in my head, I asked for “A painting of an android dreaming of an electric sheep by Edward Hopper, digital art.”

There is so much to explore that the Dallery.Gallery website published an 82-page PDF document exploring prompts.

🎨 My Pencil Pirates exercises

🪩 Talking to AIs: the skill of the 21st century

DALL•E is not the only experiment in the visual AI field.

And visual creation is not the only area AIs are assisting us with, of course. The controversial GitHub Copilot feature immediately comes to mind. As these tools are perfected, they’ll start building usable products. If the DALL•E experience taught me anything, it is how hard it is to guide the machine precisely by altering the input.

I am not referring to doomsday scenarios where ambiguous instructions would lead an AI to eradicate humanity, like a paperclip maximizer.

But we’ll need to learn to communicate with machines with extreme precision to generate usable, reproducible results.

In the same way that cloud hosting has commoditized hardware and network infrastructure, removing a barrier to entry for new startups in the tech industry, AI execution will break down the next one.

Soon, a single person with heightened product instincts and excellent AI conversational skills will be able to launch new products without any seed investment.

I am training for Texas! ;)